RenderDoc's Early History

As near as git history can determine, RenderDoc began its life on Wednesday 27th June, 2012. The idea had been rolling around in my head for a short time before then, but I didn't do any meaningful work on it before that day.

I'm writing this 5 years later to the day in 2017, and I've got that lovely nostalgic feeling going on. This is as good a time as any, then, to write down how RenderDoc came to be before I forget the details. Some of this I've mentioned to people in the past; some is only known to the few who put up with me through the early growing pains.

Motivation

In 2012 I was working at (what was then) Crytek UK, the studio largely made up of ex-Free Radical staff. The first game studio I'd worked at had gone bust, and I'd somehow blagged my way onto the R&D team, starting there just at the end of 2010. After Crysis 2 shipped, work started on Crysis 3, while many at the UK studio went back to a project that had been ongoing for a while: the PS3 & Xbox 360 editions of the original Crysis.

For a fair while I was the only dedicated graphics person on the R&D team working on C1C (Crysis 1 for Consoles), so I debugged a lot of the different issues that arose. Because of the nature of the project, the PC code was only maintained to run the editor, so all of my debugging happened through the console-specific tools.

Once that shipped in late 2011, those of us on the C1C team moved to some other internal projects before eventually all coming onto the Crysis 3 project. The UK studio was largely in charge of the multiplayer modes in both Crysis 2 and Crysis 3, with the Frankfurt studio helming the single player. However, there was always a certain amount of crossover as many core game systems were shared, and in R&D we had the greatest amount of sharing since the underlying CryEngine technology was identical.

By the middle of 2012, though, I was starting to get frustrated with one thing in particular - any time I had to investigate a graphical issue, the first thing I'd do was try to reproduce it on a console devkit. This wasn't because I found it more convenient (there was a bit more setup time than running a PC build); it was because the tools on console were far and away superior. Any PC-only bug was exasperating, as it meant trying to make do without good tools.

At the time there were a few options for graphics debugging on PC. Each IHV had their own tool: Intel had GPA (a standalone product at the time), nVidia had nSight (largely the same as it is today), and AMD had GPUPerfStudio (likewise quite similar to today's incarnation). Microsoft had two tools: the aging 'PIX for Windows' (no, not that PIX for Windows, the other PIX for Windows), and the Visual Studio graphics debugger, released for the first time in the brand-new VS2012.

Without going into 5-year-old criticisms, none of these tools was both useful and reliable for us. This was partly due to difficulties handling a project as large as Crysis 3, and partly due to inherent frustrations with the UI, e.g. being embedded in Visual Studio.

So I did something that many programmers will find familiar. I muttered some famous last words to myself and decided to build my own tool.

It can't be that hard!

Proof of Concept

Thus some time in late June I started thinking about how I'd write my own tool. The principle seemed fairly simple to me: you would intercept all D3D11 calls in the frame, man-in-the-middle style, then replay them up to wherever in the frame you wanted to inspect, and look at the textures or buffers. Easy!

What's funny looking back is that it both was and wasn't easy. At the coarsest level this is still how RenderDoc works, even on other APIs, but the devil really is in the details. There are a ton of complications and implementation-level headaches, even leaving aside the UI, which is at least half of the difficulty.
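To make the principle concrete, here is a toy sketch of the capture-and-partial-replay loop. Everything in it is hypothetical: `ToyContext` stands in for a D3D11 device context (its render targets are plain lists of integers), `CapturingProxy` plays the man-in-the-middle by logging each call before forwarding it, and `replay` rebuilds state from scratch up to a chosen event. A real D3D11 tool also has to record initial resource contents, handle threading and deferred contexts, and much more; this only shows the shape of the idea.

```python
from typing import Any, Callable, Dict, List, Tuple


# Hypothetical stand-in for a graphics API context. Render targets are
# just lists of integer "pixels" so that state is easy to inspect.
class ToyContext:
    def __init__(self) -> None:
        self.targets: Dict[str, List[int]] = {}

    def create_target(self, name: str, size: int) -> None:
        self.targets[name] = [0] * size

    def clear(self, name: str, value: int) -> None:
        self.targets[name] = [value] * len(self.targets[name])

    def draw(self, name: str, start: int, end: int, value: int) -> None:
        # "Rasterise" by writing value into the pixel range [start, end).
        target = self.targets[name]
        for i in range(start, end):
            target[i] = value


# Man-in-the-middle wrapper: the application calls this instead of the real
# context. Every call is appended to a log, then forwarded unchanged.
class CapturingProxy:
    def __init__(self, real: ToyContext) -> None:
        self._real = real
        self.log: List[Tuple[str, tuple]] = []

    def __getattr__(self, name: str) -> Callable[..., Any]:
        # Only reached for attributes not found normally, i.e. the API calls.
        fn = getattr(self._real, name)

        def wrapper(*args: Any) -> Any:
            self.log.append((name, args))
            return fn(*args)

        return wrapper


def replay(log: List[Tuple[str, tuple]], upto: int) -> ToyContext:
    """Replay events 0..upto (inclusive) into a fresh context for inspection."""
    ctx = ToyContext()
    for name, args in log[: upto + 1]:
        getattr(ctx, name)(*args)
    return ctx


if __name__ == "__main__":
    app = CapturingProxy(ToyContext())
    app.create_target("rt0", 8)  # event 0
    app.clear("rt0", 1)          # event 1
    app.draw("rt0", 0, 4, 7)     # event 2
    app.draw("rt0", 2, 6, 9)     # event 3
    for event in range(len(app.log)):
        print(event, app.log[event][0], replay(app.log, event).targets["rt0"])
```

Stepping `upto` through the log is the scripted equivalent of scrolling through drawcalls in a frame browser. Replaying from the start every time is the simplest approach; it trades speed for not having to snapshot state at every event.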

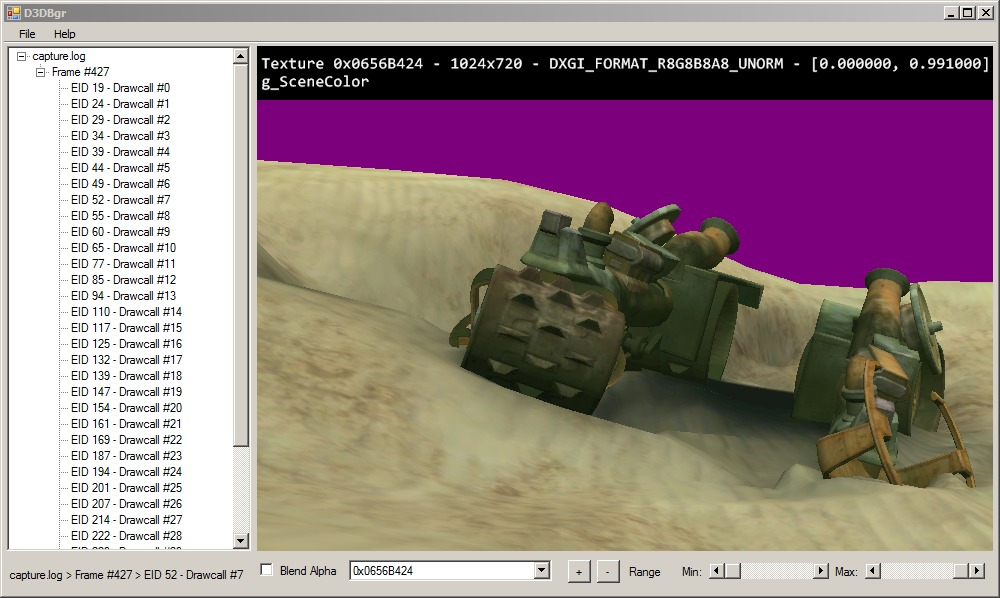

Within a week or so, I had a very simple text dump of the functions being called for a single frame. After a couple of weeks I had enough capturing to hook up an incredibly hacked-together visualiser that just had a texture display of a single pre-selected texture, and a textbox where you could enter the event to display at. After verifying that I really could see the texture updating I expanded the UI a little bit to this:

It certainly wasn't pretty, but this was the first thing that let me actually see the interim results of rendering by doing a partial frame replay. The drop-down on the left selects the render target to view (the number is the 32-bit pointer value of the D3D11 texture handle). The drop-down on the right lists all of the drawcall events, so you can choose them or scroll through with the mouse-wheel.

At the time, the project wasn't called RenderDoc. The original name I coined for it was kind of silly (and short-lived): D3Dbgr, pronounced as Dee Three Debugger. It was both a slurred-together pronounceable acronym, and a play on bgr as the primary colours in a pixel.

I even had an awesome programmer art logo:

Up until this point I hadn't mentioned the project to anyone, as I wasn't sure whether or not I was going to come up against some obstacle I couldn't get around. Also, and perhaps more likely, I didn't know whether I'd run out of steam and just drop it. This was, after all, just a fun little hobby project in my spare time; I wasn't even sure if I'd be able to make it good enough to actually use at work.

Unfortunately I don't recall exactly when I first showed the project to the other graphics people in the office. I think it was around the time of the above UI just as a proof-of-concept demo, but I don't have any record of exactly when.

I didn't try to sell it as a PIX-killer (PIX for Windows being the tool we commonly used on PC, and also the tool we used on Xbox 360), but just as the side-experiment that it was. With the core capture and replay in place, the first thing I did was start to iterate on the UI and get feedback when possible. Rather than trying to perfect the replay (which had numerous holes and problems), I made do with it being good enough for the single demo I was playing with.

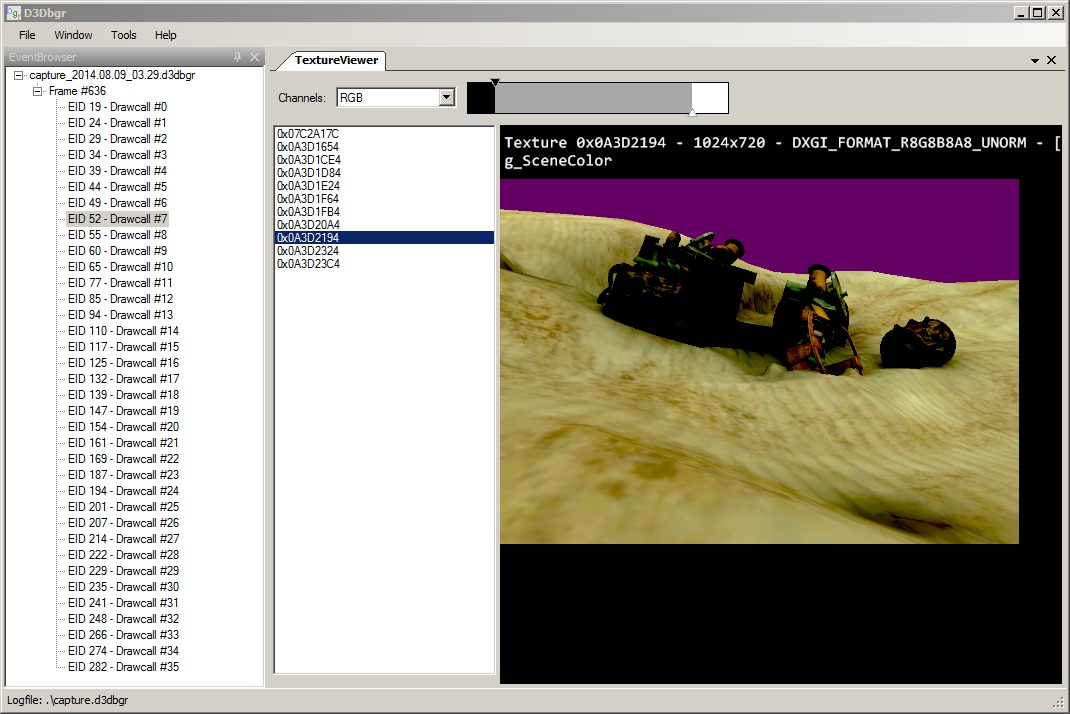

The next version of the UI was fairly similar, but had a more familiar frame browsing interface:

You can also see the beginnings of the range control, which lets you select the minimum and maximum values that should be mapped to black and white. At this point the layout and panels were still fixed, as this was the easiest way to create the UI quickly.
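The range control boils down to a remap of each value into the displayable range. A minimal sketch of the per-value math, assuming a plain linear mapping with clamping (the function name `apply_range` is hypothetical, and a real texture viewer would do this in a shader for every channel of every pixel):

```python
def apply_range(value: float, black: float, white: float) -> float:
    """Linearly remap value so black -> 0.0 and white -> 1.0, clamped to [0, 1]."""
    if white == black:
        # Degenerate range: treat it as a hard threshold to avoid dividing by zero.
        return 0.0 if value <= black else 1.0
    t = (value - black) / (white - black)
    return min(1.0, max(0.0, t))


if __name__ == "__main__":
    # An HDR value of 2.0 is mid-grey when the visible range is [0, 4].
    print(apply_range(2.0, 0.0, 4.0))
    # Depth values bunched near 1.0 become distinguishable with a narrow range.
    print(apply_range(0.995, 0.99, 1.0))
```

The point of a narrow window is to see detail in data whose interesting values occupy a tiny slice of the full range, such as depth buffers or HDR lighting.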

I still remember the thrill I got when I finally had this working and I could step through drawcalls. Although it really wasn't much code and it was a simple concept, it felt like magic.

I think that's an important lesson, and one a lot of people in graphics programming are familiar with: sometimes a hugely complex system or process can be a lot easier to understand than you think. You might have to take it in bite-sized chunks and understand it incrementally, rather than trying to comprehend the whole complex system all at once, but it's manageable. There is rarely if ever any truly byzantine magic going on, just a lot of simple building blocks that come together. Sometimes it's more than one person can hold in their head, but there's usually a way to have a general idea of the whole system and a detailed understanding of part of it.

It was clear, though, that the current fixed layout wasn't going to scale, and I knew from the past that it was annoying to end up in a situation where you couldn't see the data exactly as you wanted, sometimes forcing you to flick back and forth between screens or export data to disk to compare externally.

As an example of how things can seem more complex than they are: it took roughly 2 weeks, give or take, to make this simple hacked-together UI and capture-replay loop. It took about the same length of time just to change the UI from a fixed panel layout to dockable panels using a C# docking toolkit.

Never underestimate UI and tooling. It's way more complex than you think just to implement it at all, let alone to do a good job on UX that users will actually enjoy. The job doesn't finish when you've implemented the underlying code that does the capture or replay. Even the fanciest technology in the world isn't much use if the UI on top of it is bare-bones and painful to use - the UI is what people actually interact with day to day, so it can't be dismissed as just window-dressing.

I don't remember what happened to v0.1 and v0.2, but I have a git tag on v0.3 about a month into the project, on 29th of July 2012.

At this point I started bringing demos into the office to show around to more people. There still wasn't much to see, and being able to visualise intermediate render targets is nice but rarely enough on its own. By now I was confident that I could stick with the project and continue working on it. It still wasn't assured that this could be a tool I could rely on day to day, but at least I would have the interest to keep going.

From here I switched track and worked on the back-end more. In order to be a useful debugger I needed several things - more detailed inspection of textures (picking specific pixel values for example), inspection of shaders, buffers, and constants, and most of all a detailed view of all pipeline state.

There are lots of features you can also have in a graphics debugger like shader debugging and pixel history, but this is the core minimum feature set that needs to be present - being able to see all mutable state at any given point.

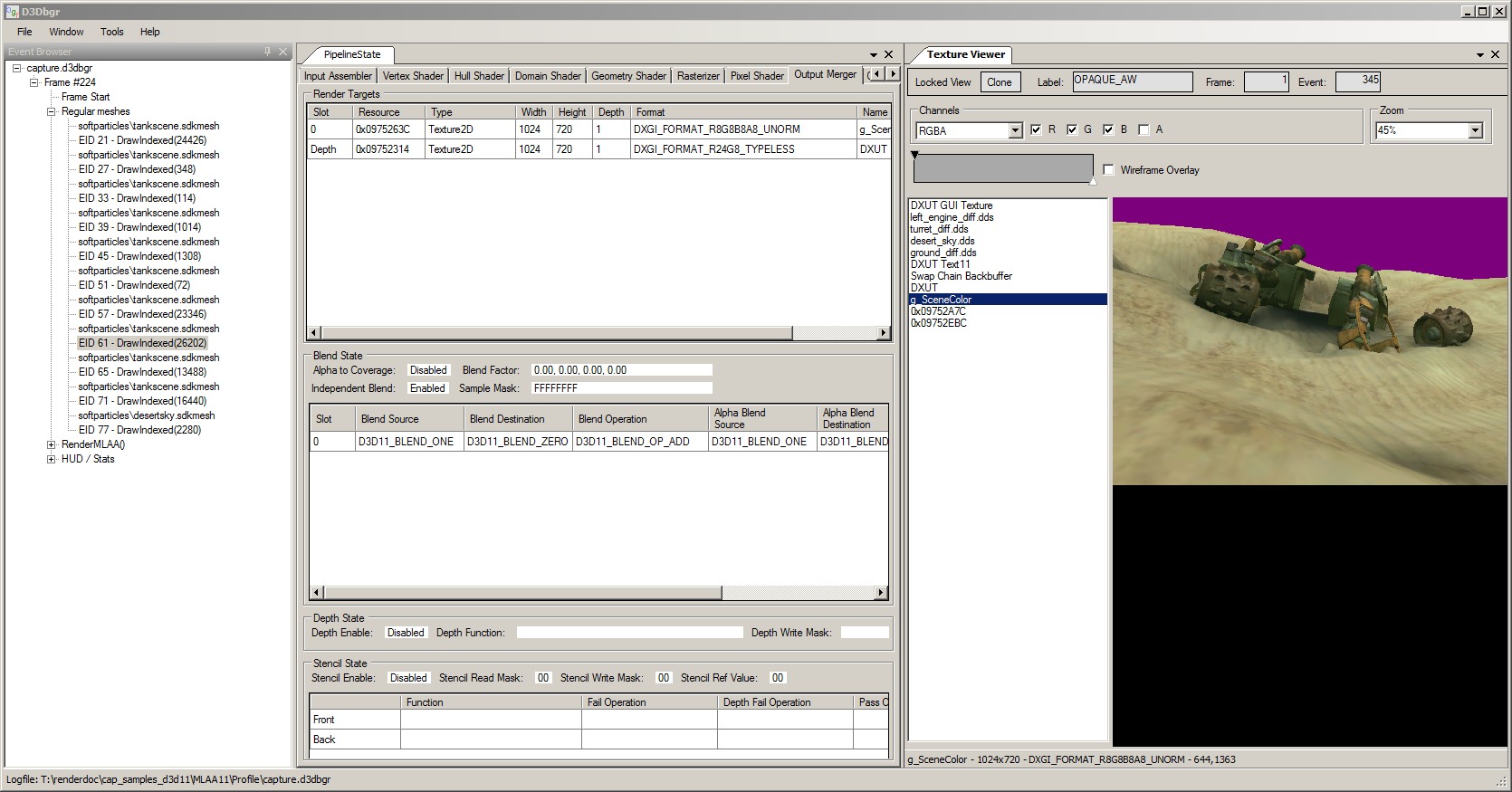

By the middle of September I had this roughly completed, and you begin to see a more recognisable ancestor of RenderDoc:

The eagle-eyed among you might notice one thing that doesn't belong: the bar at the top of the texture viewer containing information about the current event. This was placeholder UI that was never implemented, although it's a concept I may come back to in the future. The idea was that any panel would by default be 'live', showing the state at the currently selected drawcall. If you wanted, though, you could lock any panel to a view at a particular event. That way you could have a live view and multiple locked views, and more easily compare the state between different points in time. It was way too complex to implement that early in the project, though, so it was eventually removed.

The pipeline state view was particularly hard to get right. No matter how you slice it, it throws a crap ton of information at you all at the same time. If you split up the information too much and make people scroll and expand, it becomes clumsy and annoying to navigate. In the end I went with a fairly straightforward set of tables, split up roughly by pipeline stage, with the state for each. It's not ideal, but at least it's easy to find what you want.

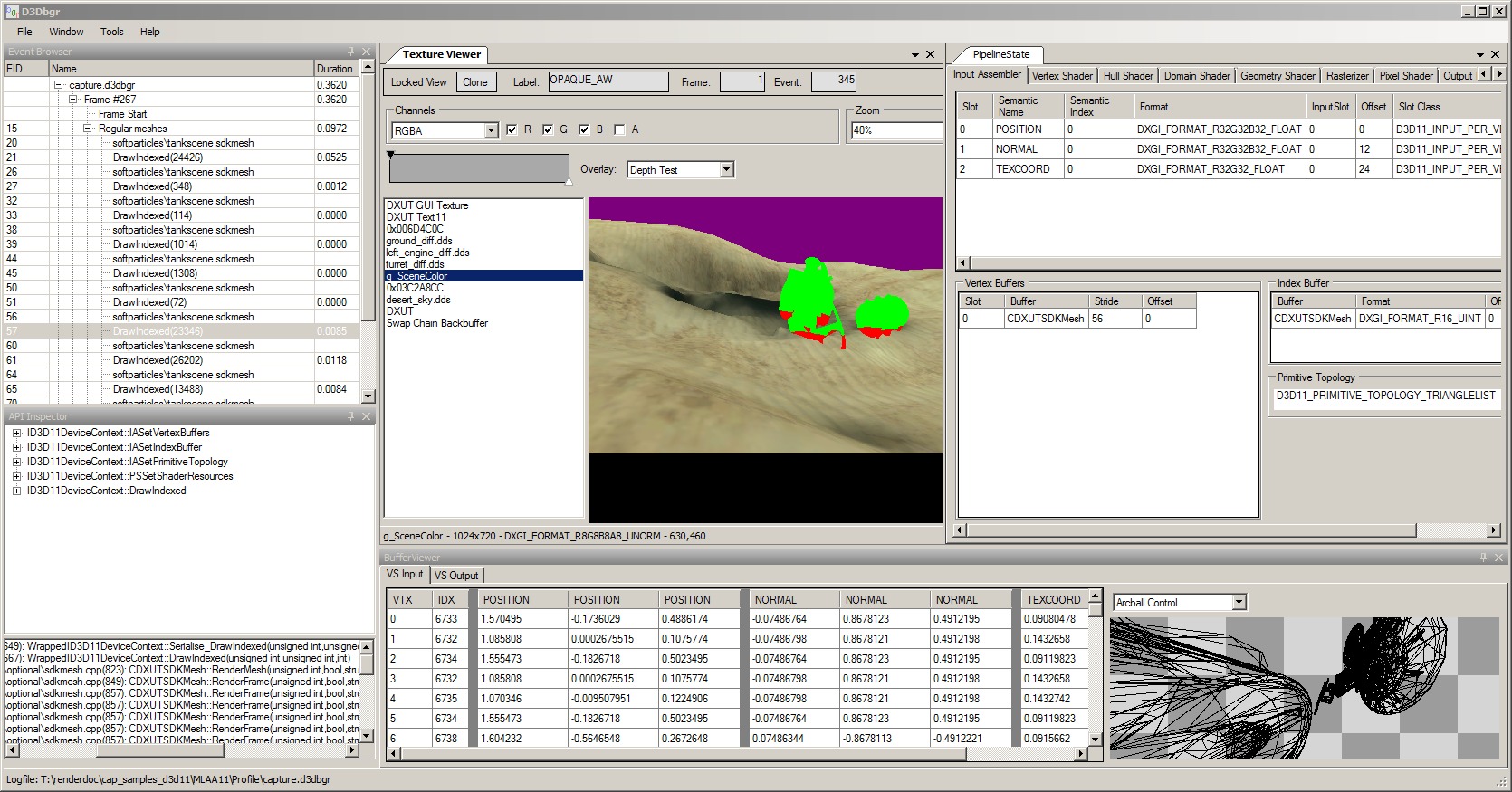

By now although it still wasn't at a place where it could be used at work I was beginning to move step by step towards that goal. At the end of 2012, after about 6 months, you can definitely see the recognisable form of what would become RenderDoc:

There was still a lot of work to do, though, on both the UI and the back-end. While most of the core UI functionality was present, it still needed a lot of polish and user-friendly tweaking. For example, although it's possible to pick pixel values in the version in the screenshot above, there's nothing anywhere to tell you how (it's the same right-click shortcut you have now; by the first public version I'd added multiple hints to draw your attention to it).

Work continued apace on spare evenings and weekends up to a tag labelled 'alpha1' on the 27th of May 2013. This was almost a year after the project started, and I believe this was the first time I was able to capture one of our CryEngine projects. Unfortunately, by this time Crysis 3 had already shipped, so the project didn't help me at all with debugging on that title, but I can safely say that it helped me and others on later projects!

Leading up to this alpha milestone, I had been working a lot on battle-testing the API support. I started by capturing small D3D11 tutorials and demos, then more complex demos, then finally full shipped commercial games. Around this time, Unity had a DirectX 11 competition to advertise the new DX11 support in Unity 4. Although the underlying renderer code was the same in each entry, these demos were invaluable in providing more complex use-cases than simple tutorials to test my code against.

Testing against games in particular was quite an interesting challenge. Since I didn't have any source or symbols (of course!), whenever I hit a crash it sometimes took a lot of guesswork before the problem was revealed. In particular, without naming any names, I know of one title that shipped with a D3D11 threading race-condition bug that only actually manifested itself when RenderDoc was attached!

Either way, over the course of April and May 2013 I slowly improved support until I was able to capture every D3D11 game I could get my hands on. Some of these had common requirements: e.g. a few games used deferred contexts, and implementing support for those instantly fixed several titles. A very common one was mapping a large buffer many times with MAP_NO_OVERWRITE, which quickly ran out of memory if the whole buffer was saved on every map. Others simply had edge cases they were hitting with a particular set of calls that no other game had hit (so far).
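To illustrate why saving the whole buffer on every map blows up, and one way around it: the sketch below keeps a shadow copy of the buffer and, on each unmap, records only the byte range that actually changed. This is an illustrative assumption, not a description of RenderDoc's actual code; `find_diff_range` and `BufferMapRecorder` are hypothetical names, and the D3D11 map type the text refers to is `D3D11_MAP_WRITE_NO_OVERWRITE`.

```python
from typing import List, Optional, Tuple


def find_diff_range(old: bytes, new: bytes) -> Optional[Tuple[int, int]]:
    """Return the smallest [start, end) byte range where old and new differ, or None."""
    if len(old) != len(new):
        raise ValueError("buffers must be the same size")
    n = len(old)
    start = 0
    while start < n and old[start] == new[start]:
        start += 1
    if start == n:
        return None  # nothing changed since the last unmap
    end = n
    while end > start and old[end - 1] == new[end - 1]:
        end -= 1
    return start, end


class BufferMapRecorder:
    """Records each map/unmap of one buffer as (offset, changed bytes)."""

    def __init__(self, size: int) -> None:
        self.shadow = bytearray(size)  # what the capture believes the buffer holds
        self.chunks: List[Tuple[int, bytes]] = []

    def on_unmap(self, contents: bytes) -> None:
        diff = find_diff_range(self.shadow, contents)
        if diff is None:
            return
        start, end = diff
        self.chunks.append((start, bytes(contents[start:end])))
        self.shadow[start:end] = contents[start:end]

    def bytes_saved(self) -> int:
        return sum(len(data) for _, data in self.chunks)


if __name__ == "__main__":
    size, write = 4096, 64
    recorder = BufferMapRecorder(size)
    buffer = bytearray(size)
    # Simulate a ring-buffer pattern: each map writes 64 new bytes after the last.
    for i in range(size // write):
        buffer[i * write:(i + 1) * write] = bytes([i + 1]) * write
        recorder.on_unmap(bytes(buffer))
    naive = (size // write) * size
    print(f"whole-buffer saves: {naive} bytes, diff-range saves: {recorder.bytes_saved()} bytes")
```

The trade-off is CPU time: every unmap now costs a comparison against the shadow copy, in exchange for capture size growing with the bytes actually written rather than with buffer size times the number of maps.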

The year of alphas

The first alpha build I brought in was worse than the tools I hoped to displace. It could barely stay up for 5 minutes without crashing; you practically had to use it while holding your breath to avoid knocking it over.

It did work in theory, though: you could capture the game (only on a test level! Don't go crazy...) and poke through the resulting commands and state. Some brave folks tried to use it and I got a lot of very useful feedback at this early stage, but it was still too early to use daily as a primary tool.

The next few alpha builds came thick and fast - alpha4 came about 10 days after alpha1. Each of these was a targeted, incremental improvement fixing things people were running into on the previous builds, along with the kind of low-hanging fruit that you never notice yourself but someone else spots instantly.

There was one problem, though: the name. As much as I liked the D3Dbgr name, no-one could remember it, and people generally didn't use it when referring to the tool. It was clear I needed to pick a better name for release. You might think this is where I came up with RenderDoc, but actually the tool had another name before that. It even had a kick-ass new icon with a neon pyramid, designed by a friend of mine. So just before the 1-year anniversary of the first commit, the project was renamed to PolyRAD.

I also started doing some long-form user tests. These involved me bringing in a sample capture from a demo or game and sitting for 15-20 minutes over lunch with the other graphics coders, looking through it to see how it worked.

At first these sessions were difficult - frequently running into crashes or broken features or bad replay. Then gradually as these issues got ironed out, the builds started to become more stable. We cheered the first time it got through 20 minutes of analysis without crashing a single time. I also got to see how other people used the tool, and noticed where they got stuck or confused.

Soon people were using the tool regularly and were actually able to get work done - initially they'd let me know that they successfully debugged an issue with it. After a while, that was just considered the norm and instead people let me know when they ran into a bug.

It's hard to pinpoint exactly where this flipped around from 'mostly broken' to 'mostly working', but at some point in the summer of 2013 I realised the tool would actually serve our needs and could be customised with any feature we wanted (within reason!). I wasn't sure if anyone else would be interested in an internally developed tool that I'd written in my spare time, but I wanted to be able to share it and open source it. I set a goal of September 2013 for the initial release, and split that up into a series of achievable features and improvements I wanted to make aside from any stabilising and bug fixing that I could manage.

The road to release

In late August of 2013 the builds were really coming together, and after alpha12 I followed up with v0.13 - the same version numbering scheme that continues today, more than 20 builds later. If you ever wondered why the first public release was v0.18, now you know!

Part of the reason for this change was that the build was going out externally for the first time. With the help of Tiago Sousa who knew many other graphics programmers in the industry at large, the first build was sent out to a handful of coders at other gamedev studios, asking for feedback in advance of a public release.

Apart from one or two replies, I don't know that many of them actually found the time to test the tool. This isn't surprising, especially since it came kind of out of the blue from a studio otherwise not known for standalone tools. I did hear back useful feedback from a couple of external people that I sent it to myself, but otherwise the testing all continued to be in-house.

I also started talking internally about how to release the tool. v0.14 was baked on the 24th of September and was basically a release candidate ready for publishing. Unfortunately due to a variety of factors, nothing much happened and the release process kind of came to a standstill. Although I continued to improve the tool based on internal feedback, it was unclear when or if it would be released publicly.

As part of continuing this process I was asked to rename the project from PolyRAD, as this was already the name of a trademarked item. Although the name was well entrenched at that point (unlike the ill-fated D3Dbgr), the project was renamed to the now familiar RenderDoc.

At the time while the delay was annoying, I had no real idea whether or not the tool would get much traction beyond the walls of our offices. Sometimes when you build something to scratch your own itch, it really is only applicable to your use-case and becomes just a custom tool in your toolbox.

It wasn't until Valve announced VOGL at Steam Dev Days in early February 2014 that things really started moving again. A short time after that a website and announcement were arranged, and RenderDoc was publicly released at the end of February. The source code was initially private while the details of licensing were sorted out, and then a month later the GitHub source repository was updated and things really kicked off.

From small beginnings

The focus of this post has been on the early start I got, up to the point of the first public release. The story doesn't end there, but I'm happy that everything since then has happened publicly, in the open, so there's less of a new story to tell.

It still surprises me sometimes to see how widely used RenderDoc is now. In my mind it's still the scrappy little hobby project I started in my evenings, but it's grown beyond anything I would have predicted. Originally my measure of success was being able to use it myself (or possibly with other colleagues) on titles I was working on. Today, I'm contracting with Valve and working full-time on future improvements and additions to the program.

It's certainly been a wild ride from the initial public release, which was Windows-only and DX11-only, to the APIs and platforms supported now. Looking forward, there's still a whole load of things I can do to improve support.

I'm not really sure what exactly the point of all of this text was, so I appreciate anyone who's made it through this far! Hopefully this has been interesting from a historical perspective.

There's one other thing I'd like to be clear on. At the risk of seeming self-effacing, I truly believe that RenderDoc is not some great, unachievable and unique accomplishment. I've gotten here partly by just sticking with it for long enough, and largely by having the sheer dumb luck to start the right project at the right time, both of which could have happened to anyone. The biggest factor, however, is that I had the right people to support me along the path. If I hadn't had the help that I've had, RenderDoc probably would never have seen the light of day, or if it had, it wouldn't have had nearly the quality that it does.

Specifically, I'd like to thank Chris Bunner, James Chilvers, and Andrew Khan from the graphics team at Crytek UK R&D, who put up with the worst of the worst when I inflicted early builds on them, and stuck with it. I'd also like to thank Jake Turner and Tiago Sousa, without whom I think I'd still be waiting to find out if a public release would happen.

Any questions or comments, feel free to tweet at or email me. And of course, if you have any issues or suggestions for RenderDoc, GitHub is the place to go.